Why do this?

The scenario: You want to play around with the fast-moving large language models, but don’t want to every random query / thought / personal health question to be logged / trained-on.

Requirements

You need to have a machine to run the LLMs. It needs to have lots of memory. Depending on the models, of course, but larger models are much better and useful. 16GB absolute minimum for a 7b-parameter model, and 27b models need 64G or more. I’m using an M4 Max Mac Studio with 128GB and that seems plenty.

The software is simple – ollama to manage and serve models, and the llm cli to run on your laptop. That way, the server does the lifting and your laptop doesn’t. They don’t, however, work out of the box, so I wanted to share some necessary steps.

Server setup with Ollama

On the server:

brew install ollama

brew services start ollamaNow we need some models. With lots of memory, I got the biggest models, but you should read the list to choose a few. This step pulls gigabytes, so beware on a metered connection.

ollama pull llama4:latest

ollama pull deepseek-r1-70b

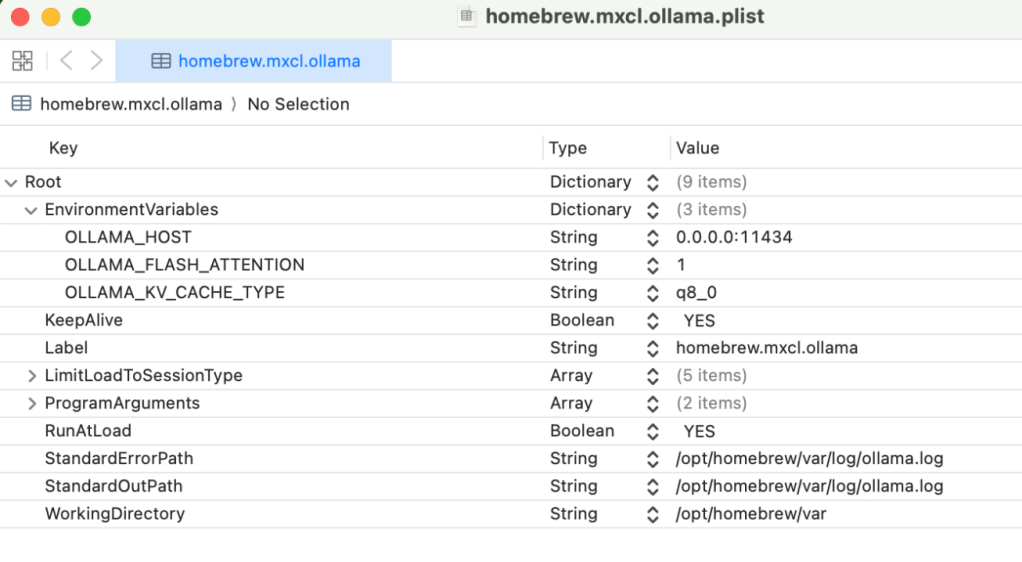

ollama pull gemma3:27bNow we need to tell Ollama to listen to all interfaces – this makes it accessible, since by default it binds only to localhost. There’s a GitHub issue about this if you want more details. We have to edit the plist:

open /opt/homebrew/opt/ollama/homebrew.mxcl.ollama.plist

As you can see, we have to add the OLLAMA_HOST environment variable, set to 0.0.0.0:11434

After that, save the plist and run

brew services restart ollamaTo test the change, open the new page in a browser. (I’m using hostname axiom, FYI.)

open http://axiom:11434/and you should see

That’s the server sorted – on to the client!

LLM client

The LLM cli by Simon Willison is just neat. It can do all sorts of things for you. We want to do the following:

- Install LLM

- Add our models using Ollama’s OpenAI compatible REST API to extra-openai-models.yaml

- Set the default model

- Set the sorta-not-needed API key (it’ll ask for it, give it the key ‘ollama’)

brew install llm

llm keys set ollama

llm keys pathSave that path – mine is

/Users/pfh/Library/Application Support/io.datasette.llm/keys.json

We need to create and populate

/Users/pfh/Library/Application Support/io.datasette.llm/extra-openai-models.yamlHere’s a ChatGPT-generated shell script to query Ollama over ssh and populate the extra-openai-models.yaml

#!/bin/bash

pushd /Users/pfh/Library/Application\ Support/io.datasette.llm

# Configuration

HOST="axiom.phfactor.net"

PORT="11434"

OUTPUT="extra-openai-models.yaml"

API_BASE="http://${HOST}:${PORT}/v1"

API_KEY_NAME="ollama"

# Fetch models from Ollama

echo "Fetching models from Ollama at ${HOST}:${PORT}..."

MODEL_LIST=$(curl -s "http://${HOST}:${PORT}/api/tags" | jq -r '.models[].name')

# Begin YAML

echo "# Auto-generated OpenAI-compatible model config for LLM CLI" > "$OUTPUT"

echo "# Source: http://${HOST}:${PORT}/api/tags" >> "$OUTPUT"

echo "" >> "$OUTPUT"

# Write each model as a separate YAML block

while IFS= read -r MODEL; do

MODEL_ID="${MODEL//:/-}" # replace colon with dash

cat <<EOF >> "$OUTPUT"

- model_id: $MODEL_ID

model_name: $MODEL

api_base: "$API_BASE"

api_key_name: $API_KEY_NAME

can_stream: true

supports_schema: true

vision: true

EOF

done <<< "$MODEL_LIST"

echo "Wrote $OUTPUT with $(echo "$MODEL_LIST" | wc -l) models."Here’s my models file:

# Auto-generated OpenAI-compatible model config for LLM CLI

# Source: http://axiom.phfactor.net:11434/api/tags

- model_id: qwen3-latest

model_name: qwen3:latest

api_base: "http://axiom.phfactor.net:11434/v1"

api_key_name: ollama

can_stream: true

supports_schema: true

vision: true

- model_id: deepseek-r1-70b

model_name: deepseek-r1:70b

api_base: "http://axiom.phfactor.net:11434/v1"

api_key_name: ollama

can_stream: true

supports_schema: true

vision: true

- model_id: llama4-latest

model_name: llama4:latest

api_base: "http://axiom.phfactor.net:11434/v1"

api_key_name: ollama

can_stream: true

supports_schema: true

vision: true

- model_id: gemma3-27b

model_name: gemma3:27b

api_base: "http://axiom.phfactor.net:11434/v1"

api_key_name: ollama

can_stream: true

supports_schema: true

vision: trueSet the default model – I’m still experimenting, but for now its

llm models default llama4-latestImportant!

The api_base there is not the one in the docs – I had to use ChatGPT (sauce for the gander) to figure out that you have to add the /v1 suffix or the llm cli will return 404s.

What do you get?

From my laptop, I can run random queries and it works great – no limits, ask anything (Lots of stories about this!) and I feel oddly better about asking basic, stupid tech question that I “should” know or remember. For example:

- What’s the makefile syntax for this?

- Tell me how to glob the largest non-zero-file of episode.mp3 in a tree of files?

- What’s the jq invocation to pull this?

llm 'nmap syntax to look for open tcp on a single host'

To use `nmap` to scan for open TCP ports on a single host, you can use the following basic syntax:

```bash

nmap -sT <hostname or IP address>

```

Here's a breakdown of the options used:

- `-sT` specifies a TCP connect scan, which is a type of scan that tries to establish a full TCP connection to the target ports. This is one of the most common scan types and works well for finding open TCP ports.

If you want to scan all 65,535 TCP ports (which can take a long time), you can use:

```bash

nmap -sT -p- <hostname or IP address>

```

However, for a more efficient and commonly used approach, scanning the top 1000 most common ports can be sufficient for many cases:

```bash

nmap -sT -p 1-1000 <hostname or IP address>

```

- `-p 1-1000` specifies the range of ports to scan. Adjust this range according to your needs.

### Additional Options

- **OS Detection:** `-O` (capital O, not zero) can help identify the operating system of the target, though this might not always be accurate or useful.

- **Version Detection:** `-sV` can provide information about the versions of services running on open ports.

- **Script Scanning:** `--script` can run NSE (Nmap Scripting Engine) scripts against the target.

### Full Command Example

For a more detailed scan:

```bash

nmap -sT -p 1-1000 -sV -O <hostname or IP address>

```

Make sure to replace `<hostname or IP address>` with the actual hostname or IP address of the target you're scanning.

### Permissions

Keep in mind that running `nmap` might require administrative or root privileges, especially for certain types of scans. If you run into issues, consider using `sudo` (on Unix-like systems) before your `nmap` command.

### Legal and Ethical Considerations

Always ensure you have permission to scan a target. Unauthorized scanning can be considered malicious and may lead to legal consequences.Postscript

I opened an issue on the LLM cli, so their docs may be updated to document these steps.